Fake Evidence, Real Money: How AI Tools Are Fueling a New Wave of Fraud

Summary

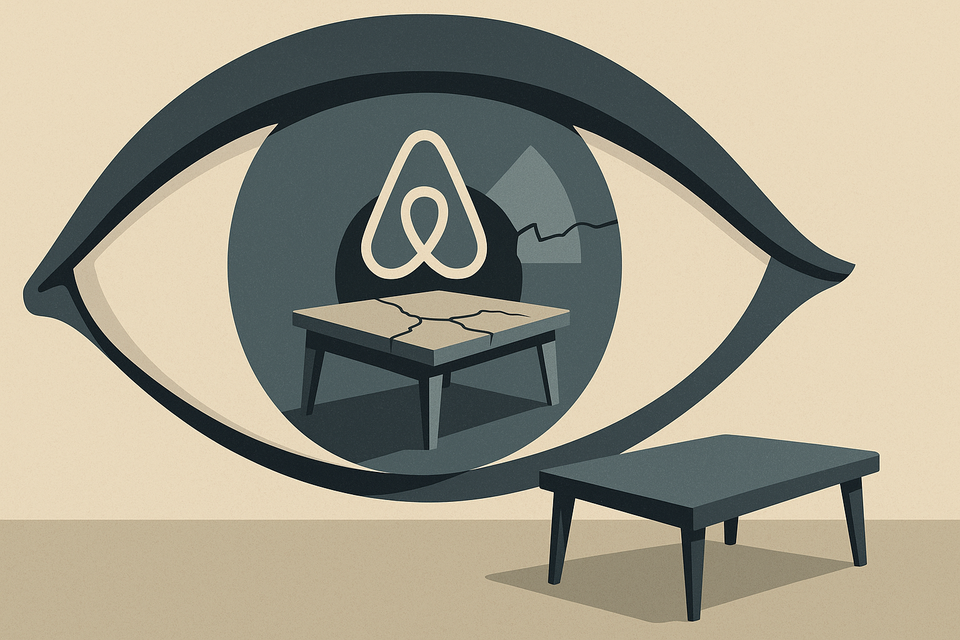

From fake coffee table cracks on Airbnb to deepfake CFO meetings, AI tools are now being used to fabricate “evidence” across industries.

The kicker? Most platforms still can’t tell real from generated.

Here’s a breakdown of how fraudsters are using generative AI — and who’s catching them.

Trust the Image? Pay the Price

If you’ve ever thought, “AI can’t really hurt me,” try losing $25 million because your CFO’s face was cloned in a Zoom call.

No, this isn’t Black Mirror. It’s 2025.

AI-generated fraud is no longer some abstract legal theory or dystopian thinkpiece fodder. It’s a practical tool in the hands of people who want to get refunds, claim insurance, or — you know — wire themselves millions using fake executive avatars. And it’s working. Until it’s not.

So let’s talk about AI-fueled evidence fraud.

Because your cracked coffee table might not be real. But the invoice definitely is.

Cross-Industry AI Fraud Cases: The Receipts

| Year | Sector | AI Trick Used | What They Tried | How It Was Caught |
|---|---|---|---|---|
| 2025 | Airbnb | AI-generated damage photos | £12,000 damage claim | Guest spotted photo inconsistencies; media picked it up |
| 2024 | Zurich UK | Stolen salvage yard photo w/ fake plate | False auto claim | Metadata anomalies |
| 2025 | Hong Kong firm | Deepfake CFO video call | $25M transfer request | Voice/behavior mismatch in follow-up call |
| 2025 | eBay | Fake image of shattered phone | Refund scam | Seller found undamaged phone listed by buyer |
| 2025 | Lacoste | AI-generated fake tags | Counterfeit return | AI scanner caught false logos |

(Full table below. Spoiler: It gets worse.)

What’s Going On Here?

1. Fraud-as-a-Service Is Now a Thing

You no longer need to know Photoshop or write Python.

Just type: “Crack on coffee table, 4K, looks real.” Voilà.

Even ChatGPT’s image tools can now make receipts that look authentic — until they forget how commas work in Euro-style pricing.
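One cheap sanity check, in the spirit of the comma slip above, is to confirm that every amount on a receipt uses the same decimal convention. The sketch below is a minimal illustration, not a fraud detector: `price_styles` and `has_mixed_price_styles` are hypothetical helper names, and it assumes the receipt text has already been extracted (for example by OCR).

```python
import re

# Amounts with exactly two decimals and optional thousands grouping,
# e.g. "1.234,56" (Euro style) or "1,234.56" (US/UK style).
EURO_STYLE = re.compile(r"^(?:\d{1,3}(?:\.\d{3})+|\d+),\d{2}$")
US_STYLE = re.compile(r"^(?:\d{1,3}(?:,\d{3})+|\d+)\.\d{2}$")
# Any run of digits, dots, and commas that starts and ends with a digit.
NUMBER_TOKEN = re.compile(r"\d[\d.,]*\d")


def price_styles(receipt_text: str) -> set[str]:
    """Return which decimal conventions appear among amounts in the text."""
    styles = set()
    for token in NUMBER_TOKEN.findall(receipt_text):
        if EURO_STYLE.match(token):
            styles.add("euro")
        elif US_STYLE.match(token):
            styles.add("us")
        # Tokens matching neither pattern (dates, phone numbers) are ignored.
    return styles


def has_mixed_price_styles(receipt_text: str) -> bool:
    """True when one receipt mixes conventions, which merits a human look."""
    return len(price_styles(receipt_text)) > 1
```

A mixed result is only a prompt for review. Plenty of genuine receipts are messy, and a careful forger will get the commas right.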

2. Humans Still Do Most of the Work

Despite all the AI hype, most of these cases were caught not by AI, but by humans — adjusters, guests, journalists, random Reddit detectives.

Turns out, “I have a weird feeling about this JPEG” is still a top-tier forensic tool.

3. Platforms Are Losing Control

Airbnb sided with the fraudster at first.

eBay nearly paid out a scam.

Even insurers with AI detection systems needed a person to override them.

And deepfake conference calls? Yeah, your finance team better like in-person coffee meetings again.
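The pattern in the insurer example, where a model scores the evidence but a person gets the final word, might be routed like this. Everything here is an assumption for illustration: the `Claim` fields, the thresholds, and the `route_claim` function are hypothetical and not any insurer's actual rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Claim:
    claim_id: str
    amount: float
    model_fraud_score: float              # 0.0 (looks genuine) to 1.0 (looks fake)
    human_decision: Optional[str] = None  # "approve" or "reject" once reviewed


def route_claim(
    claim: Claim,
    review_above_score: float = 0.3,      # hypothetical threshold
    review_above_amount: float = 1_000.0, # hypothetical threshold
) -> str:
    """Return "approve", "reject", or "human_review" for a claim."""
    # A recorded human decision always overrides the model.
    if claim.human_decision in ("approve", "reject"):
        return claim.human_decision
    # Suspicious or expensive claims go to a person instead of auto-paying.
    if (claim.model_fraud_score >= review_above_score
            or claim.amount >= review_above_amount):
        return "human_review"
    return "approve"
```

The model never rejects on its own in this sketch. It only decides how much human attention a claim gets.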

The Systemic Gap

Here’s the scary part:

AI reduces the cost of faking evidence to near-zero. But the cost of proving it’s fake is still high — both in time and technical skill.

So unless platforms and regulators close that gap, we’ll keep seeing:

- fake invoices → real payouts

- fake receipts → real refunds

- fake videos → real lawsuits

- fake damage photos → real cleaning fees

And yes, real anxiety about staying in an Airbnb ever again.

How Some Are Fighting Back

The good news? Some defenses are working:

- Zurich Insurance: AI + human examiners = stronger fraud flagging

- eBay sellers: cross-checking resale listings

- Lacoste: real-time AI scanners for logo/stitching verification

- OpenAI & Adobe: embedding provenance metadata

- B2B firms: post-deepfake, now require callback-verification for large wire transfers
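The callback rule in the last bullet is simple enough to write down. The sketch below is one possible policy, with a hypothetical threshold, a hypothetical `DIRECTORY` of known-good phone numbers, and hypothetical `callback_number` and `may_release` functions. The key design point is that the callback number comes from an internal directory and never from the request itself, because a deepfaked caller controls everything inside the request.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy threshold: transfers at or above this need a callback.
CALLBACK_THRESHOLD = 10_000.00

# Hypothetical internal directory of known-good numbers, maintained out of band.
DIRECTORY = {"cfo@example.com": "+1-555-0100"}


@dataclass
class WireRequest:
    requester: str          # identity the request claims to come from
    amount: float
    number_in_request: str  # contact number supplied by the requester


def callback_number(request: WireRequest) -> Optional[str]:
    """Look up the number to call; the number inside the request is ignored."""
    return DIRECTORY.get(request.requester)


def may_release(request: WireRequest, confirmed_via: Optional[str]) -> bool:
    """Release a large transfer only if it was confirmed on the directory number."""
    if request.amount < CALLBACK_THRESHOLD:
        return True
    expected = callback_number(request)
    return expected is not None and confirmed_via == expected
```

With this rule, a request that was "confirmed" on the number the requester supplied is still blocked, as is any large request from someone who is not in the directory.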

Still, these are the exceptions. Not the standard.

Why This Matters (Like, Now)

This isn’t just about “bad actors.” It’s about structural risk:

- Insurance companies pay out on fake crashes

- Retailers eat the cost of non-existent damage

- Platforms like Airbnb alienate real customers

- Legal systems wrestle with synthetic evidence

And regulators? They’re playing catch-up.

The FTC’s new initiative, “Operation AI Comply,” is a start, but let’s just say they’re still on level 1 of the AI boss fight.

Takeaways

- Generative AI is now cheap, fast, and good enough to fool real systems

- Platforms must assume images, receipts, and even voices can be faked

- Without hybrid defenses (AI + human), fraud will keep scaling as fast as the tools that enable it

- If you’re not verifying evidence today, someone will pay for it tomorrow — probably you